The American business landscape is vibrating with a new kind of energy. In boardrooms from Silicon Valley to Wall Street, in manufacturing plants across the Midwest, and in healthcare systems from coast to coast, a single, transformative conversation is taking place: how to harness the power of Artificial Intelligence (AI). The promise is immense—unprecedented efficiency, hyper-personalized customer experiences, breakthrough product innovation, and a competitive edge that could define the next decade.

Yet, beneath the surface of this excitement lies a critical, often overlooked, and decidedly unglamorous truth. The AI revolution is not, at its core, an algorithm revolution. It is a data revolution. The most sophisticated AI model in the world, built on the latest research, is rendered useless—or worse, dangerously misleading—without the right fuel: high-quality, well-managed, and strategically aligned data.

For US organizations, this presents a unique moment of reckoning. The question is no longer if you should adopt AI, but how you can build a foundation that makes AI adoption successful, scalable, and trustworthy. This article delves into the essential components of an AI-ready data strategy from a US perspective, examining the unique challenges, regulatory environment, and strategic imperatives that American businesses must confront to thrive in this new era.

The AI Tidal Wave: More Than Just Hype

To understand the urgency, one must appreciate the fundamental shift that modern AI, particularly generative AI, represents. Previous waves of analytics and “small AI” (focused on specific tasks like recommendation engines or fraud detection) were complex, but they operated on a more manageable scale. They often relied on structured data from internal systems—sales records, customer databases, transaction logs.

Generative AI and large language models (LLMs) are different. They are data-hungry behemoths. Their performance depends heavily on the volume, variety, and veracity of the data they are trained on and the data they process. They can consume unstructured data (emails, documents, video, audio, social media feeds) at a scale previously unimaginable. This capability is what makes them so powerful, but it also exposes every weakness in an organization’s data infrastructure.

The direct implication for your data strategy is this: the scope of data you must now manage has exploded. What was once a project to clean up your customer relationship management (CRM) system is now a strategic imperative to govern every piece of information your company creates or touches.

The Pillars of an AI-Ready Data Strategy

An AI-ready data strategy is not a minor tweak to existing practices. It is a foundational overhaul built on four interconnected pillars. Neglecting any one of them introduces significant risk and limits the potential return on AI investments.

Pillar 1: Data Quality and Integrity – The “Garbage In, Gospel Out” Problem

The old adage “garbage in, garbage out” has never been more consequential. With AI, the output isn’t just a flawed report; it can be a confidently written but entirely fabricated legal brief, a discriminatory hiring recommendation, or a wildly inaccurate financial forecast. AI models, especially LLMs, can amplify and obscure data errors in ways that are difficult to detect.

Key Components for the US Context:

- Data Profiling and Cleansing: This is the non-negotiable first step. US companies must implement automated tools to continuously scan data for inaccuracies, inconsistencies, duplicates, and missing values. This is not a one-time project but an ongoing process.

- Standardization and Unification: With data pouring in from myriad sources (legacy on-premises systems, cloud applications, IoT devices, third-party vendors), creating a single source of truth is paramount. This means enforcing standardized formats for critical data elements like customer names, addresses, and product codes.

- Bias Detection and Mitigation: For US organizations, this is both an ethical and a legal imperative. Historical data often contains societal biases. An AI model trained on biased hiring data will perpetuate discrimination, exposing the company to litigation and reputational damage under laws such as Title VII of the Civil Rights Act and evolving state-level AI regulations. Proactive steps must be taken to audit data for bias and employ techniques to mitigate it.
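One way to make the bias audit described above concrete is to compare outcome rates across groups in historical data. The sketch below is a minimal illustration, not a legal test: it computes each group's selection rate and divides it by the highest group's rate, then flags ratios below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment guidance. The field names (`group`, `hired`), the sample records, and the function names are all hypothetical.

```python
from collections import Counter


def selection_rates(records, group_key="group", outcome_key="hired"):
    """Return the share of positive outcomes for each group."""
    totals = Counter()
    positives = Counter()
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            positives[row[group_key]] += 1
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive outcomes in any group")
    return {g: rate / best for g, rate in rates.items()}


# Hypothetical historical hiring data: 100 applicants per group.
hiring_records = (
    [{"group": "A", "hired": True}] * 30
    + [{"group": "A", "hired": False}] * 70
    + [{"group": "B", "hired": True}] * 18
    + [{"group": "B", "hired": False}] * 82
)

rates = selection_rates(hiring_records)
ratios = impact_ratios(rates)

# Assumed threshold: ratios under 0.8 are flagged for human review.
needs_review = [g for g, ratio in ratios.items() if ratio < 0.8]
print(rates, ratios, needs_review)
```

A flagged ratio is a prompt for investigation rather than proof of discrimination; the appropriate threshold and grouping are decisions for legal and compliance teams.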

Pillar 2: Data Governance and Security – Trust in an Age of Scrutiny

Data is a strategic asset, and like any valuable asset, it requires robust governance and security. A lax approach to data governance was always risky, but with AI, the stakes are far higher. The US regulatory environment is rapidly evolving, and public trust is fragile.

Key Components for the US Context:

- A Federated Governance Model: Centralized, IT-heavy governance models are too slow for the AI era. A federated model empowers business units (the “data citizens”) to use data responsibly within a centralized framework of policies and standards set by a central data governance council. This balances agility with control.

- Clear Data Lineage and Provenance: You must be able to trace any piece of data, and any AI-generated insight, back to its origin. This “data lineage” is critical for debugging model errors, ensuring regulatory compliance, and building trust in AI outputs. If a model recommends a multimillion-dollar investment, the board has a right to know what data that recommendation was based on.

- Robust Security and Privacy Protocols: The consequences of a data breach are magnified when that data is used to train core AI models. Adherence to frameworks like the NIST Cybersecurity Framework and compliance with regulations like HIPAA (for health data), GLBA (for financial data), and a growing patchwork of state laws, like the California Consumer Privacy Act (CCPA), are essential. Data must be encrypted at rest and in transit, and access must be strictly controlled via role-based permissions.
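The lineage requirement in this list can be illustrated with a small sketch. It assumes lineage is recorded as a simple mapping from each data asset to the assets it was derived from, and walks that mapping back to the original sources. The asset names and the `trace_sources` helper are hypothetical; real lineage tools capture far more detail (timestamps, transformations, owners).

```python
# Hypothetical lineage graph: each asset maps to the assets it was derived from.
LINEAGE = {
    "investment_recommendation": ["revenue_forecast_model"],
    "revenue_forecast_model": ["sales_features", "market_features"],
    "sales_features": ["crm_export", "erp_orders"],
    "market_features": ["vendor_market_feed"],
    "crm_export": [],
    "erp_orders": [],
    "vendor_market_feed": [],
}


def trace_sources(asset, lineage):
    """Return the sorted root sources that ultimately feed into `asset`."""
    sources = set()
    seen = set()
    stack = [asset]
    while stack:
        node = stack.pop()
        if node in seen:
            continue  # guard against revisiting shared or cyclic dependencies
        seen.add(node)
        parents = lineage.get(node, [])
        if not parents:
            sources.add(node)  # nothing upstream: treat as an original source
        stack.extend(parents)
    return sorted(sources)


origins = trace_sources("investment_recommendation", LINEAGE)
print(origins)
```

With a record like this in place, a question from the board about what data a recommendation rests on becomes a lookup rather than an investigation.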

Pillar 3: Data Architecture and Infrastructure – The Engine Room

You cannot run a Formula 1 car on a go-kart track. Similarly, you cannot support enterprise AI on a fragmented, legacy data architecture. The ability to collect, store, process, and serve data at high velocity and low latency is the bedrock of AI innovation.

Key Components for the US Context:

- The Rise of the Data Lakehouse: Many US companies are caught between data warehouses (great for structured data, but expensive and rigid) and data lakes (great for storing all data, but often become unusable “data swamps”). The modern solution is the data lakehouse, which combines the cost-efficiency and flexibility of a data lake with the management and ACID transactions of a data warehouse. This architecture is ideal for the diverse data types consumed by AI.

- Cloud-First and Multi-Cloud Strategy: The scalability and advanced AI/ML services offered by major US cloud providers (AWS, Microsoft Azure, Google Cloud) make a cloud-first approach almost mandatory. Furthermore, a multi-cloud strategy can prevent vendor lock-in, optimize costs, and enhance resilience.

- The Shift to Real-Time Stream Processing: Batch processing, where data is moved and processed in scheduled chunks, is too slow for many modern AI applications. Real-time stream processing platforms (e.g., Apache Kafka, Apache Flink) allow data to be analyzed the moment it is generated, enabling AI-driven real-time fraud detection, dynamic pricing, and immediate customer service interventions.
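To show the difference between batch and stream processing in miniature, the sketch below handles events one at a time as they arrive and keeps only a short trailing window of state per card. It is plain Python rather than Kafka or Flink, and the event fields (`card`, `ts`), the 60-second window, and the threshold of three transactions are illustrative assumptions, not recommended fraud rules.

```python
from collections import defaultdict, deque


def flag_rapid_transactions(events, window_seconds=60, max_in_window=3):
    """Yield each event that pushes a card above `max_in_window`
    transactions inside the trailing window.

    Events are assumed to arrive in timestamp order.
    """
    recent = defaultdict(deque)  # card -> timestamps still inside the window
    for event in events:
        times = recent[event["card"]]
        times.append(event["ts"])
        # Drop timestamps that have aged out of the trailing window.
        while times[0] <= event["ts"] - window_seconds:
            times.popleft()
        if len(times) > max_in_window:
            yield event


# Hypothetical event stream (timestamps in seconds).
stream = [
    {"card": "c1", "ts": 0},
    {"card": "c1", "ts": 10},
    {"card": "c2", "ts": 12},
    {"card": "c1", "ts": 20},
    {"card": "c1", "ts": 25},   # fourth c1 transaction within 60 seconds
    {"card": "c2", "ts": 90},
    {"card": "c1", "ts": 100},  # earlier c1 activity has aged out
]

alerts = list(flag_rapid_transactions(stream))
print(alerts)
```

Because the function is a generator, an alert is produced as soon as the triggering event is seen, instead of waiting for a scheduled batch job to run.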

Pillar 4: Data Culture and Literacy – The Human Element

Technology and processes are worthless without the people to use them effectively. The AI revolution demands a fundamental shift in organizational culture. Data can no longer be the sole domain of data scientists and IT specialists. It must become a shared language and a shared responsibility.

Key Components for the US Context:

- Democratizing Data Access (Safely): Employees across the organization, from marketing to HR to operations, need access to data to solve problems with AI. This is enabled through modern data catalogs and self-service analytics platforms that provide a “shopfront” for approved, high-quality data assets, allowing non-technical users to find and use data without compromising security.

- Upskilling for the AI Age: A massive upskilling effort is required. This involves two tracks:

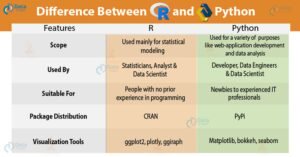

- Technical Upskilling: Training data engineers on modern cloud platforms and data scientists on MLOps (Machine Learning Operations) to productionize models.

- Business Upskilling: Teaching business leaders and frontline employees how to formulate problems for AI to solve, how to interpret AI-driven insights, and how to understand the limitations and risks of AI tools.

- Leadership from the Top: The CEO and board must be the chief evangelists for the data-driven culture. They must champion data initiatives, invest in literacy programs, and lead by example by using data and AI in their own strategic decision-making.

The Unique US Landscape: Opportunities and Headwinds

The United States presents a distinct environment for this transformation, characterized by immense opportunity and significant challenges.

Opportunities:

- Capital and Investment: Access to deep venture capital and private equity markets provides the fuel for significant investment in data and AI infrastructure.

- World-Leading Tech Ecosystem: Proximity to and partnerships with the world’s leading technology companies and cloud providers facilitate access to cutting-edge tools and expertise.

- A Culture of Innovation: A historical willingness to embrace disruptive technologies and a high tolerance for calculated risk can accelerate adoption.

Headwinds and Challenges:

- The Legacy System Quagmire: Many large, established US corporations are burdened by decades-old legacy systems (mainframes, siloed databases) that are expensive to maintain and incredibly difficult to integrate into a modern data architecture.

- A Fragmented Regulatory Environment: The absence of a comprehensive federal data privacy law (like the EU’s GDPR) creates a complex patchwork of state-level regulations. Navigating the CCPA in California, the CPA in Colorado, and other emerging laws adds significant compliance overhead for national companies.

- The Talent War: The competition for skilled data engineers, data scientists, and AI specialists is ferocious, driving up costs and making it difficult for non-tech companies to build in-house capabilities.


A Practical Roadmap: From Aspiration to Implementation

Knowing the pillars is one thing; building them is another. Here is a phased approach to evolving your data strategy for AI.

Phase 1: Assess and Align (Months 1-3)

- Conduct a Data Maturity Audit: Honestly assess your current state. What is the quality of your core data assets? How effective is your current governance? What is your architectural debt?

- Identify High-Value, Low-Risk AI Use Cases: Don’t boil the ocean. Work with business leaders to identify 2-3 pilot projects that have clear business value and manageable data requirements (e.g., an AI-powered customer service chatbot, a predictive maintenance model for manufacturing).

- Secure Executive Sponsorship: Present a compelling business case for the strategic investment, tying it directly to revenue growth, cost savings, or risk mitigation.

Phase 2: Foundation and Pilot (Months 4-12)

- Establish the Core Governance Framework: Form a data governance council. Define initial data quality standards and ownership for the data domains critical to your pilot projects.

- Modernize Key Parts of Your Architecture: Begin migrating the data for your pilot projects to a modern cloud platform. Implement a data catalog to start creating an inventory of trusted data.

- Execute the Pilots and Measure ROI: Run your AI pilots aggressively. The goal is not just to test the technology, but to test the entire data supply chain—from ingestion to insight. Measure the results meticulously to build momentum for further investment.

Phase 3: Scale and Operationalize (Year 2 and Beyond)

- Expand the Governance Model: Scale the federated governance model to cover more data domains across the enterprise.

- Industrialize the Data Pipeline: Build out robust, automated data pipelines to feed a growing portfolio of AI applications.

- Embed AI and Data Literacy: Launch formal upskilling programs and integrate data-driven decision-making into corporate KPIs and performance reviews.

Conclusion: The Time for Strategic Action is Now

The AI revolution is not a future event; it is unfolding in real time. For US businesses, it represents the most significant wave of technological change since the advent of the internet. The organizations that will emerge as leaders in this new era will not necessarily be those with the most advanced AI algorithms, but those with the most robust, trustworthy, and scalable data foundations.

The journey to an AI-ready data strategy is complex and requires sustained commitment, investment, and cultural change. It demands that we move data from the background to the foreground of strategic planning. The question posed in the title, “Is Your Data Strategy Ready for the AI Revolution?” is the most critical question a US business leader can ask today. The future of your competitive advantage, your operational resilience, and your very relevance may depend on the answer. The time to build your foundation is now, before the wave crashes and the opportunity is lost.


FAQ Section

Q1: We have a data warehouse and some BI tools. Isn’t that enough for AI?

A: While a data warehouse and Business Intelligence (BI) tools are excellent for descriptive analytics (telling you what happened), they are often insufficient for AI. AI, especially generative AI and machine learning, requires access to vast amounts of both structured and unstructured data (e.g., text, images, logs) in a flexible environment. Traditional data warehouses can be expensive and rigid for this purpose. The modern data lakehouse architecture is better suited to serve the diverse and demanding data needs of AI.

Q2: How much will it cost to make our data strategy AI-ready?

A: The cost is significant but should be viewed as a strategic investment, not just an IT expense. Costs include cloud infrastructure and services, new software tools (for data quality, cataloging, etc.), and, most importantly, talent acquisition and upskilling. However, the cost of inaction is likely far higher—in the form of missed opportunities, operational inefficiencies, and competitive irrelevance. A phased, use-case-driven approach helps manage costs and demonstrate ROI early.

Q3: With the rapid advancement of AI, won’t the tools just get easier to use, reducing the need for a complex data strategy?

A: While AI tools are indeed becoming more user-friendly (e.g., no-code AI platforms), this actually increases the importance of a solid data strategy. Easier-to-use tools mean more people across the organization will be building AI solutions. Without a strong governance framework, this leads to “shadow AI”—a proliferation of ungoverned, potentially risky AI models built on faulty data. The strategy ensures that this democratization happens safely and effectively.

Q4: How do we handle the ethical concerns and potential bias in our AI models?

A: This starts with your data. Proactive bias detection and mitigation are core components of an AI-ready data strategy. This involves:

- Diverse Data Sourcing: Ensuring your training data is representative of the populations you serve.

- Bias Auditing: Using technical tools to statistically analyze datasets for features that act as proxies for protected attributes (e.g., zip codes correlating with race).

- Explainability (XAI): Implementing tools and practices that help explain why an AI model made a particular decision.

- Human-in-the-Loop: Maintaining human oversight for high-stakes decisions and using human reviewers to continuously monitor for biased outcomes.
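As a rough illustration of the proxy check mentioned under Bias Auditing, the sketch below measures how much knowing one field (here a zip code) improves a guess at a protected attribute. It uses Goodman and Kruskal's lambda, one of several possible association measures and chosen here only for its simplicity: 0 means the field adds nothing, 1 means it predicts the attribute perfectly. The field names, labels, and counts are invented.

```python
from collections import Counter, defaultdict


def goodman_kruskal_lambda(rows, feature, attribute):
    """Proportional reduction in error when guessing `attribute` from `feature`."""
    overall = Counter(row[attribute] for row in rows)
    by_feature = defaultdict(Counter)
    for row in rows:
        by_feature[row[feature]][row[attribute]] += 1

    n = len(rows)
    # Correct guesses when always picking the most common attribute value.
    baseline_correct = max(overall.values())
    # Correct guesses when picking the most common value within each feature value.
    informed_correct = sum(max(c.values()) for c in by_feature.values())

    if n == baseline_correct:
        return 0.0  # attribute has a single value; nothing left to predict
    return (informed_correct - baseline_correct) / (n - baseline_correct)


# Hypothetical applicant data: zip code alongside a protected attribute.
applicants = (
    [{"zip": "zip_a", "group": "group_x"}] * 40
    + [{"zip": "zip_a", "group": "group_y"}] * 10
    + [{"zip": "zip_b", "group": "group_x"}] * 5
    + [{"zip": "zip_b", "group": "group_y"}] * 45
)

proxy_strength = goodman_kruskal_lambda(applicants, "zip", "group")
print(round(proxy_strength, 3))
```

A high value suggests that a model could learn the protected attribute indirectly through the feature, which is a signal to review whether that feature belongs in the training data.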

Q5: We’re not a tech company. How can we possibly compete for the specialized data and AI talent we need?

A: The talent war is real, but there are strategies beyond just hiring:

- Upskill from Within: Invest in training your existing, loyal employees—those with domain knowledge of your business—in data engineering and data science skills. They often provide more value than an external hire who lacks industry context.

- Leverage Managed Services: Use cloud AI services (e.g., Azure OpenAI Service, Amazon SageMaker, Google Vertex AI) that abstract away much of the underlying complexity, reducing the need for deep, specialized expertise for every project.

- Focus on Culture: Create a mission-driven, innovative culture that attracts talent looking to solve meaningful problems, even if you can’t match the salary of a FAANG company.

Q6: Given the lack of a federal US data privacy law, how should we approach compliance?

A: In the absence of a single federal standard, the most prudent approach is to build to the strictest standard that applies to you. For many, this means building a data governance program that is compliant with the most demanding state law, which is widely regarded as the California Consumer Privacy Act (CCPA) as amended by the CPRA. This includes ensuring rights to access, delete, and opt out of the sale of personal information, and implementing data protection assessments for high-risk activities. A robust, principle-based data strategy will make compliance with any future federal law significantly easier.

Q7: What is the single most important first step we can take next week?

A: Initiate a data quality assessment on a single, critical data asset. Choose one dataset that is fundamental to your business and a likely candidate for an early AI pilot—for example, your primary customer master list. Run it through a data profiling tool (even a simple one) to quantify its quality in terms of completeness, accuracy, and duplication. This concrete, data-driven assessment will provide an undeniable baseline, make the abstract problem of “bad data” tangible, and create the urgent business case for a broader strategic initiative.
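Even without a dedicated tool, a first-pass profile can be sketched in a few lines. The example below is a minimal illustration that measures completeness per field and counts likely duplicate rows after light normalization (lowercasing and collapsing whitespace). The customer records, field names, and `profile` function are hypothetical. Accuracy is deliberately left out, because it cannot be measured without a trusted reference to compare against.

```python
from collections import Counter


def normalize(value):
    """Lowercase and collapse whitespace so near-identical values match."""
    return " ".join(str(value or "").lower().split())


def profile(records, required_fields, key_fields):
    """Return row count, per-field completeness, and duplicate row count."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to profile")

    completeness = {}
    for field in required_fields:
        # Note: None, empty strings, and whitespace-only values count as missing.
        filled = sum(1 for r in records if normalize(r.get(field)))
        completeness[field] = filled / total

    # Rows sharing the same normalized key fields are treated as duplicates.
    keys = Counter(
        tuple(normalize(r.get(f)) for f in key_fields) for r in records
    )
    duplicate_rows = sum(count - 1 for count in keys.values() if count > 1)

    return {
        "rows": total,
        "completeness": completeness,
        "duplicate_rows": duplicate_rows,
    }


# Hypothetical customer master list.
customers = [
    {"name": "Ada Lopez", "email": "ada@example.com", "state": "CA"},
    {"name": "ada  lopez", "email": "ADA@example.com", "state": "CA"},
    {"name": "Ben Wu", "email": "", "state": "NY"},
    {"name": "Cy Tran", "email": "cy@example.com", "state": None},
]

report = profile(customers, ["name", "email", "state"], ["name", "email"])
print(report)
```

Numbers like "75% of customer records have an email" or "1 in 4 rows is a duplicate" are the kind of baseline that turns a vague concern about data quality into a specific case for investment.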