If you’re a data analyst in the United States, you’ve felt the shift. The questions from stakeholders are evolving from “What happened?” to “What will happen?” and “What should we do next?” In boardrooms from Silicon Valley to Wall Street, “Machine Learning” and “AI” are the buzzwords du jour, often surrounded by a mystique that makes them seem like magic reserved for Ph.D. data scientists.

It’s time to demystify that.

Think of Machine Learning (ML) not as a magical black box, but as the natural, powerful evolution of the analytical skills you already possess. You already clean data, build regression models, create segmentation with clustering, and interpret results for business value. ML is a suite of tools that automates and scales these processes, allowing you to find deeper patterns and make more accurate predictions from larger, more complex datasets.

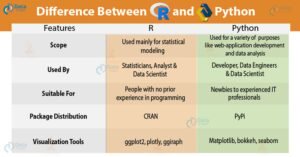

This guide is crafted specifically for you—the US-based analyst. We’ll ground every concept in real-world American business contexts, from retail and finance to healthcare and marketing. We’ll use the tools you’re likely already familiar with, like Python and SQL, and focus on the practical “how” and “why,” not just the theoretical “what.”

By the end of this article, you will have a firm grasp of core ML concepts, understand how to frame business problems as ML problems, and be able to build, validate, and deploy a simple ML model yourself. Let’s begin the journey from data analyst to a data analyst who leverages machine learning.

Part 1: Laying the Foundation – What Machine Learning Really Is

1.1 A Simple Definition

At its core, Machine Learning is the science of getting computers to learn and act without being explicitly programmed for every single rule. Instead, we feed algorithms data, and they learn the underlying patterns and relationships from that data.

A Simple Analogy: Imagine you’re teaching a child to identify a dog. You don’t give them a checklist of 100 rules (has four legs, has fur, barks, etc.). You show them many pictures, saying “this is a dog” and “this is not a dog.” The child’s brain learns the patterns. Machine learning works similarly. We provide a dataset (the “pictures”) and the correct answers (the “labels”), and the algorithm learns the pattern.

1.2 How It Differs from Traditional Analytics

This is a crucial distinction for analysts to understand.

| Traditional Analytics | Machine Learning |
|---|---|
| Goal: Descriptive & Diagnostic (What happened? Why?) | Goal: Predictive & Prescriptive (What will happen? What should we do?) |
| Approach: Rule-based, hypothesis-driven. You query data you have. | Approach: Pattern-based, data-driven. The algorithm finds patterns you might not have hypothesized. |
| Output: Reports, Dashboards, Historical Insights. | Output: Predictive Models, Recommendations, Automated Decisions. |
| Example: A SQL query to find last quarter’s top-selling products. | Example: A model that predicts which customers are most likely to churn next quarter. |

Traditional analytics looks in the rearview mirror. Machine learning helps you see the road ahead.

1.3 The Three Paradigms of Machine Learning

ML can be broadly categorized into three types, each suited for different kinds of tasks.

1. Supervised Learning: Learning with a Labeled Guide

This is the most common and intuitive type. The algorithm is trained on a dataset that includes both the input data and the desired output (the “label” or “answer”).

- Use Case: You have historical data on house prices (the label) along with features like square footage, number of bedrooms, and zip code. You train a model to predict the price of a new house.

- Common Techniques: Linear Regression, Logistic Regression, Decision Trees, Random Forests.
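To make the house-price use case concrete, here is a minimal sketch using scikit-learn's LinearRegression. The data is synthetic: the column names (`sqft`, `bedrooms`), the pricing rule, and the dollar figures are assumptions invented for illustration, not real market data. Zip code is left out to keep the example short.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic, made-up housing data (illustration only)
rng = np.random.default_rng(42)
n = 500
houses = pd.DataFrame({
    "sqft": rng.uniform(800, 3500, n),
    "bedrooms": rng.integers(1, 6, n),
})
# Hypothetical pricing rule plus noise; the model has to recover it from the data
houses["price"] = (
    50_000
    + 150 * houses["sqft"]
    + 10_000 * houses["bedrooms"]
    + rng.normal(0, 20_000, n)
)

X = houses[["sqft", "bedrooms"]]  # features (inputs)
y = houses["price"]               # label (the "answer")
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = LinearRegression().fit(X_train, y_train)
r2 = model.score(X_test, y_test)  # R^2 on houses the model has not seen

# Predict the price of a new, unseen house
new_house = pd.DataFrame({"sqft": [2000], "bedrooms": [3]})
predicted_price = model.predict(new_house)[0]
print(f"Test R^2: {r2:.2f}, predicted price: ${predicted_price:,.0f}")
```

Because the labels were generated from a known rule, the prediction can be checked against it: the rule gives $380,000 for this house before noise, and the fitted model should land close to that.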

2. Unsupervised Learning: Finding Hidden Structures

Here, the algorithm is given data without any labels. Its goal is to find inherent patterns, groupings, or structures within the data.

- Use Case: Segmenting customers based on purchasing behavior without pre-defining the segments. You don’t know what the segments are; the algorithm finds them.

- Common Techniques: Clustering (K-Means), Dimensionality Reduction (PCA).
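The segmentation use case might look like the following sketch with scikit-learn's KMeans. The customers are synthetic, drawn from three made-up behavior profiles, and the column names and the choice of three clusters are assumptions for illustration. In practice you would not know the right number of segments in advance.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Synthetic customers drawn from three made-up behavior profiles
customers = pd.DataFrame({
    "annual_spend": np.concatenate([
        rng.normal(200, 30, 100),
        rng.normal(1200, 100, 100),
        rng.normal(3000, 200, 100),
    ]),
    "orders_per_year": np.concatenate([
        rng.normal(2, 0.5, 100),
        rng.normal(12, 2, 100),
        rng.normal(40, 5, 100),
    ]),
})

# Scale first so that dollar amounts do not dominate order counts
scaled = StandardScaler().fit_transform(customers)

# No labels are provided; K-Means groups customers purely by similarity
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
customers["segment"] = kmeans.fit_predict(scaled)

# Average behavior per discovered segment, for business interpretation
segment_profile = customers.groupby("segment").mean().round(1)
print(segment_profile)
```

The segment numbers themselves are arbitrary. The analyst's job is to read the profile table and give each group a business meaning, such as "occasional buyers" or "high-value regulars".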

3. Reinforcement Learning: Learning by Trial and Error

An agent learns to make decisions by performing actions in an environment to maximize a cumulative reward. It’s like training a dog with treats.

- Use Case: Less common in standard business analytics, but used in areas like robotics, game AI (AlphaGo), and optimizing complex systems like supply chain logistics.

- Common Technique: Q-Learning.
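For the curious, here is a toy sketch of tabular Q-Learning. The environment is a hypothetical five-cell corridor in which the agent starts at the left end and earns a reward only on reaching the right end. The learning rate, discount factor, and exploration rate are illustrative assumptions, not recommended settings.

```python
import numpy as np

N_STATES = 5         # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)   # action 0 = step left, action 1 = step right
ALPHA = 0.5          # learning rate
GAMMA = 0.9          # discount factor for future rewards
EPSILON = 0.2        # probability of taking a random (exploratory) action

rng = np.random.default_rng(0)
q_table = np.zeros((N_STATES, len(ACTIONS)))


def choose_action(state):
    """Explore at random sometimes, or whenever both actions look equally good."""
    if rng.random() < EPSILON or q_table[state, 0] == q_table[state, 1]:
        return int(rng.integers(len(ACTIONS)))
    return int(np.argmax(q_table[state]))


for episode in range(500):
    state = 0
    while state != N_STATES - 1:
        action = choose_action(state)
        # Move, but stay inside the corridor
        next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
        reward = 1.0 if next_state == N_STATES - 1 else 0.0
        # Q-Learning update: nudge the estimate toward
        # (immediate reward + discounted value of the best next action)
        target = reward + GAMMA * np.max(q_table[next_state])
        q_table[state, action] += ALPHA * (target - q_table[state, action])
        state = next_state

# Best action in each non-goal cell (1 means "step right")
greedy_policy = np.argmax(q_table[:-1], axis=1)
print(greedy_policy)
```

Nobody told the agent that moving right is correct. It worked that out from the rewards alone, which is the essence of trial-and-error learning.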

For the remainder of this guide, we will focus primarily on Supervised Learning, as it is the most directly applicable starting point for most analysts.

Part 2: The Machine Learning Project Lifecycle – A Practical Framework

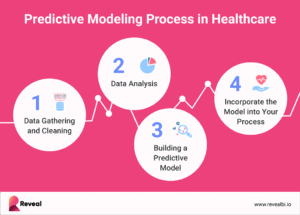

Understanding the end-to-end process is critical. It’s not just about building a model; it’s about solving a business problem. We’ll use the CRISP-DM (Cross-Industry Standard Process for Data Mining) framework, adapted for a modern US business context.

Phase 1: Business Understanding – The “Why”

This is the most critical phase. Without a clear business objective, your ML project is doomed to fail.

- Action: Engage with US stakeholders (Marketing, Operations, Finance) to define the problem.

- Key Questions:

  - What decision will this model inform?

  - What is the measurable business goal? (e.g., Reduce customer churn by 10%, Increase click-through rate by 5%)

  - How will we measure success? (Define KPIs)

- US Business Example: A Midwest retail chain wants to reduce inventory costs. The business problem: “We need to predict demand for products at each store 8 weeks in advance to optimize our supply chain and reduce overstock.”

Phase 2: Data Acquisition & Understanding – The “What”

What data do you need to solve this problem? As a US analyst, you have a wealth of data sources, but also specific legal considerations.

- Action: Identify and collect relevant data.

- Potential Data Sources:

  - Internal: Sales transactions, CRM data, website analytics, customer demographics.

  - External (US Focus):

    - Economic Data: Bureau of Labor Statistics (BLS), Federal Reserve Economic Data (FRED).

    - Geospatial Data: US Census Bureau data, Google Maps API.

    - Weather Data: National Oceanic and Atmospheric Administration (NOAA).

    - Industry-specific: Healthcare (CMS data), Finance (SEC filings).

- Legal Consideration: Be acutely aware of data privacy laws like CCPA (California) and sector-specific laws like HIPAA (Healthcare). Ensure you have the right to use the data and are anonymizing where necessary.

Phase 3: Data Preparation – The “Gutting and Cleaning”

This is where analysts typically spend most of their project time; figures of 70-80% are commonly cited. A model is only as good as the data it’s trained on.

- Action: Clean and transform the raw data into a format suitable for modeling.

- Key Tasks:

  - Handling Missing Values: Impute with mean/median, or use algorithms that handle missing data.

  - Encoding Categorical Variables: Convert text (e.g., “New York”, “California”) into numbers (One-Hot Encoding).

  - Feature Scaling: Normalize or standardize numerical features so one variable doesn’t dominate others.

  - Feature Engineering: Create new, more predictive features from existing ones (e.g., from a ‘date’ column, create ‘day_of_week’, ‘is_weekend’, ‘is_holiday’).
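The date-based feature engineering mentioned in the last task can be sketched as follows. The sales records are made up, and treating "holiday" as "US federal holiday" (via pandas' built-in USFederalHolidayCalendar) is an assumption. A retailer might well prefer its own calendar of shopping events.

```python
import pandas as pd
from pandas.tseries.holiday import USFederalHolidayCalendar

# Hypothetical daily sales records (dates and amounts are made up)
sales = pd.DataFrame({
    "date": pd.to_datetime(
        ["2024-07-03", "2024-07-04", "2024-07-06", "2024-11-28"]
    ),
    "units_sold": [120, 310, 205, 90],
})

# US federal holidays for the year covered by the data
holidays = USFederalHolidayCalendar().holidays(
    start="2024-01-01", end="2024-12-31"
)

# Derive new features from the single 'date' column
sales["day_of_week"] = sales["date"].dt.dayofweek  # Monday=0 ... Sunday=6
sales["is_weekend"] = sales["day_of_week"] >= 5
sales["is_holiday"] = sales["date"].isin(holidays)
print(sales)
```

One raw column has become three model-ready features, each of which captures a pattern (weekday rhythm, weekend traffic, holiday spikes) that the raw timestamp hides.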

Let’s see this in action with Python code. We’ll use pandas, the workhorse library for data manipulation.

```python
# Import necessary libraries
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.impute import SimpleImputer

# Load a sample dataset (e.g., a US customer churn dataset).
# Assumes columns like 'Tenure', 'MonthlyCharges', 'TotalCharges',
# 'InternetService', and 'Churn', with 'InternetService' the only text feature.
df = pd.read_csv('customer_churn.csv')

# 1. Encode categorical variables like 'InternetService'
# One-hot encoding creates a new binary column for each category
df_encoded = pd.get_dummies(df, columns=['InternetService'], prefix='IS')

# 2. Encode the target variable 'Churn' (Yes/No) as 1/0
df_encoded['Churn'] = df_encoded['Churn'].map({'Yes': 1, 'No': 0})

# 3. Separate features (X) and target (y)
X = df_encoded.drop('Churn', axis=1)  # Everything except the Churn column
y = df_encoded['Churn']               # The Churn column

# 4. Split the data into training and testing sets (80/20 split)
# This is VITAL to test the model on unseen data.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
X_train, X_test = X_train.copy(), X_test.copy()  # Work on independent copies

# 5. Handle missing values in 'TotalCharges'
# Fit on the training set only, so no test-set information leaks into training
imputer = SimpleImputer(strategy='median')  # Median is robust to outliers
X_train[['TotalCharges']] = imputer.fit_transform(X_train[['TotalCharges']])
X_test[['TotalCharges']] = imputer.transform(X_test[['TotalCharges']])

# 6. Scale the numerical features (again, fit on the training set only)
num_cols = ['MonthlyCharges', 'Tenure', 'TotalCharges']
scaler = StandardScaler()
X_train[num_cols] = scaler.fit_transform(X_train[num_cols])
X_test[num_cols] = scaler.transform(X_test[num_cols])

print("Data Preparation Complete!")
print(f"Training Set Size: {X_train.shape}")
print(f"Testing Set Size: {X_test.shape}")
```

Phase 4: Modeling – The “Fitting”

Now for the part everyone thinks of: choosing an algorithm and training it.

- Action: Select an appropriate algorithm and train it on your prepared training data.

- Choosing a Model: Start simple. Linear models are interpretable and a good baseline. For more complex patterns, tree-based models like Random Forest or Gradient Boosting are often very effective.

- Key Concept: Train-Test Split: We already did this in the code above. We train the model on one subset of the data (X_train, y_train) and test its performance on a completely separate, unseen subset (X_test, y_test). This exposes “overfitting,” where a model memorizes the training data but fails on new data.
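A small experiment shows what overfitting looks like in numbers. This is a sketch on synthetic data from scikit-learn's make_classification, with label noise added on purpose. The dataset sizes and the `max_depth=3` setting are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy data: flip_y assigns a random label to about 20% of rows
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=5,
    flip_y=0.2, random_state=42,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# An unconstrained tree keeps splitting until it has memorized the training set
deep_tree = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)
# Limiting the depth forces the tree to learn only the broad patterns
shallow_tree = DecisionTreeClassifier(max_depth=3, random_state=42).fit(X_train, y_train)

for name, tree in [("Unconstrained", deep_tree), ("max_depth=3", shallow_tree)]:
    train_acc = tree.score(X_train, y_train)
    test_acc = tree.score(X_test, y_test)
    print(f"{name}: train accuracy = {train_acc:.2f}, test accuracy = {test_acc:.2f}")
```

The warning sign is the gap: the unconstrained tree scores perfectly on data it has seen and noticeably worse on data it has not. Without a held-out test set, that gap would be invisible.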

Let’s train two common models: Logistic Regression (simple) and Random Forest (powerful).

```python
# Import model classes
from sklearn.linear_model import LogisticRegression
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# --- Model 1: Logistic Regression ---
logreg_model = LogisticRegression(random_state=42)
logreg_model.fit(X_train, y_train)  # Train the model

# Make predictions on the test set
y_pred_logreg = logreg_model.predict(X_test)

# --- Model 2: Random Forest ---
rf_model = RandomForestClassifier(n_estimators=100, random_state=42)  # 100 trees
rf_model.fit(X_train, y_train)  # Train the model

# Make predictions on the test set
y_pred_rf = rf_model.predict(X_test)

print("Model Training Complete!")
```

Phase 5: Evaluation – The “Reality Check”

How good is your model? You must evaluate it rigorously against the business objectives defined in Phase 1.

- Action: Use metrics to assess model performance on the test set.

- Key Metrics for Classification (like our Churn example):

  - Accuracy: (Correct Predictions) / (Total Predictions). Simple, but can be misleading for imbalanced datasets (e.g., 99% of customers don’t churn).

  - Precision: Of the customers we predicted would churn, how many actually did? (Minimizing false alarms).

  - Recall: Of all the customers who actually churned, how many did we correctly predict? (Finding all the churners).

  - F1-Score: The harmonic mean of Precision and Recall. A single balanced metric.

  - Confusion Matrix: A table showing true positives, false positives, true negatives, and false negatives.

```python
# Evaluate Logistic Regression
print("--- Logistic Regression Evaluation ---")
print(f"Accuracy: {accuracy_score(y_test, y_pred_logreg):.2f}")
print(classification_report(y_test, y_pred_logreg))

# Evaluate Random Forest
print("\n--- Random Forest Evaluation ---")
print(f"Accuracy: {accuracy_score(y_test, y_pred_rf):.2f}")
print(classification_report(y_test, y_pred_rf))

# The classification report provides Precision, Recall, and F1-Score for each class.
```
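To see where those numbers come from, the sketch below derives each metric by hand from the four cells of a confusion matrix. The ten labels are hypothetical, chosen so the arithmetic is easy to follow.

```python
from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

# Hypothetical outcomes for ten customers (1 = churned, 0 = stayed)
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

# For binary labels, ravel() returns the counts in the order tn, fp, fn, tp
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = (tp + tn) / (tp + tn + fp + fn)          # share of all predictions that were right
precision = tp / (tp + fp)                          # share of predicted churners who churned
recall = tp / (tp + fn)                             # share of actual churners we caught
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two

print(f"TP={tp}, FP={fp}, FN={fn}, TN={tn}")
print(f"accuracy={accuracy:.2f}, precision={precision:.2f}, recall={recall:.2f}, f1={f1:.2f}")
# prints: TP=3, FP=1, FN=1, TN=5
# prints: accuracy=0.80, precision=0.75, recall=0.75, f1=0.75
```

The hand-computed values match what scikit-learn's `precision_score`, `recall_score`, and `f1_score` return for the same inputs.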

Business Interpretation: If the business goal is to create a targeted retention campaign with a limited budget, Precision is key—you don’t want to waste resources on false alarms. If the goal is to catch every single potential churner at all costs, Recall is more important.
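One practical lever for this trade-off is the decision threshold. By default, `predict` flags a customer when the predicted churn probability is at least 0.5, but you can apply your own cutoff to the output of `predict_proba`. The sketch below uses synthetic data, and the three thresholds are arbitrary examples.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced data: roughly 20% of rows belong to the positive class
X, y = make_classification(
    n_samples=2000, n_features=10, weights=[0.8, 0.2], random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
churn_probability = model.predict_proba(X_test)[:, 1]  # P(class 1) for each row

results = {}
for threshold in (0.3, 0.5, 0.7):
    flagged = (churn_probability >= threshold).astype(int)
    results[threshold] = (
        precision_score(y_test, flagged, zero_division=0),
        recall_score(y_test, flagged),
        int(flagged.sum()),
    )
    p, r, n_flagged = results[threshold]
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}, flagged={n_flagged}")
```

Raising the threshold shrinks the list of flagged customers, so recall can only fall, while precision usually (though not always) rises. A limited campaign budget argues for a higher threshold, a "catch everyone" goal for a lower one.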

Phase 6: Deployment & Monitoring – The “Launch”

A model in a Jupyter Notebook delivers zero business value. It must be deployed to create impact.

- Action: Integrate the model into a business process and monitor its performance over time.

- Deployment Options:

  - Batch Prediction: Run the model nightly/weekly to score customers and update a dashboard or CRM list.

  - API Endpoint: Wrap the model in a REST API (using Flask, FastAPI, or cloud services like AWS SageMaker). This allows real-time predictions, e.g., for fraud detection during a transaction.

- Monitoring: Model performance decays over time as data patterns change (this is called “model drift”). You must continuously monitor its accuracy and retrain it with new data periodically.
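A batch-prediction job can be quite small. The sketch below saves a trained model with joblib and then reloads it in a separate "nightly" step to score customers. The file name, the feature names, and the use of a temporary directory are all hypothetical stand-ins; a real job would read fresh data from your warehouse and write the results to a table or CRM.

```python
import os
import tempfile

import joblib
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# --- Training job: fit once and persist the model to disk ---
X, y = make_classification(n_samples=500, n_features=5, random_state=42)
feature_names = [f"feature_{i}" for i in range(5)]  # hypothetical column names
train_df = pd.DataFrame(X, columns=feature_names)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(train_df, y)

model_path = os.path.join(tempfile.mkdtemp(), "churn_model.joblib")  # hypothetical location
joblib.dump(model, model_path)

# --- Nightly batch job: load the saved model and score current customers ---
loaded_model = joblib.load(model_path)
# Stand-in for fresh customer data pulled from a database
new_customers = train_df.sample(20, random_state=1).reset_index(drop=True)
scores = new_customers.assign(
    churn_probability=loaded_model.predict_proba(new_customers[feature_names])[:, 1]
)

# Highest-risk customers first, ready to export to a dashboard or CRM list
call_list = scores.sort_values("churn_probability", ascending=False).head(5)
print(call_list)
```

Separating the training step from the scoring step is the key design choice: the model is retrained on a slow schedule, while scoring runs as often as the business needs fresh predictions.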

Part 3: Common Pitfalls and How to Avoid Them

- Garbage In, Garbage Out: The #1 rule. No algorithm can salvage poor-quality data.

- Overfitting: Creating a model that is too complex and memorizes the noise in the training data. Solution: Use train-test split, cross-validation, and simpler models.

- Data Leakage: Accidentally using information from the future or from the test set during training. This creates overly optimistic results. Solution: Be extremely careful during feature engineering and ensure the test set is completely isolated until the final evaluation.

- Ignoring Business Context: Building a technically perfect model that doesn’t solve a real business problem or is too expensive to implement. Solution: Constant communication with stakeholders.
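Two of these pitfalls, overfitting and data leakage, can be guarded against with one pattern: put every preparation step inside a scikit-learn Pipeline and evaluate it with cross-validation. The sketch below uses a synthetic stand-in for a churn table. The column names echo the earlier example, but the values and the churn rule are made up.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Synthetic stand-in for a churn table (all values are made up)
rng = np.random.default_rng(42)
n = 600
df = pd.DataFrame({
    "Tenure": rng.integers(1, 72, n).astype(float),
    "MonthlyCharges": rng.uniform(20, 120, n),
    "InternetService": rng.choice(["DSL", "Fiber optic", "No"], n),
})
df.loc[rng.choice(n, 30, replace=False), "MonthlyCharges"] = np.nan  # simulate gaps
# Hypothetical rule: customers with short tenure churn more often
churn_prob = 1 / (1 + np.exp((df["Tenure"] - 24) / 8))
df["Churn"] = (rng.random(n) < churn_prob).astype(int)

# Preparation steps live inside the pipeline, not in separate notebook cells
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
preprocess = ColumnTransformer([
    ("num", numeric, ["Tenure", "MonthlyCharges"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["InternetService"]),
])
model = Pipeline([
    ("prep", preprocess),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Each fold re-fits the imputer, scaler, and encoder on its own training part only
scores = cross_val_score(
    model, df.drop(columns="Churn"), df["Churn"], cv=5, scoring="f1"
)
print(f"F1 per fold: {scores.round(2)}, mean: {scores.mean():.2f}")
```

Because the imputer and scaler are fitted inside each fold, no statistic computed from held-out rows can leak into training, and five scores instead of one give a sense of how stable the result is.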

Part 4: Your Path Forward – Building ML Expertise

- Master the Tools: Deepen your knowledge of Python (pandas, scikit-learn), SQL, and get familiar with cloud platforms like AWS, GCP, or Azure.

- Practice, Practice, Practice: Websites like Kaggle offer real-world datasets and competitions. Start with their beginner-friendly “Titanic” and “House Prices” competitions.

- Learn the Theory (Gradually): You don’t need a Ph.D., but understanding the intuition behind key algorithms (like how a decision tree makes a split) will make you a better modeler.

- Focus on Communication: Learn to translate complex model outcomes into actionable business recommendations for US executives. A slide saying “The Random Forest achieved 92% accuracy” is useless. A slide saying “Our model identifies the 5,000 customers most likely to cancel, and a $10 retention offer to them will save an estimated $2M in revenue” is invaluable.

Conclusion

Machine Learning is not a mystical art. It is a logical extension of the data analysis you are already doing. By understanding the core concepts, following a disciplined project lifecycle, and leveraging the powerful tools at your disposal, you can start integrating ML into your work to drive tangible value for your US-based organization.

The journey begins by reframing the next business problem not as a request for a report, but as a question: “Can we predict this?” Start small, be methodical, and build from there. The future of American business will be built on data, and you are now equipped to be one of its architects.

Frequently Asked Questions (FAQ)

Q1: I’m an Excel/SQL analyst. Do I need to learn Python to do Machine Learning?

While it’s possible to do some basic ML in other tools (like Power BI), Python (with libraries like scikit-learn) is the industry standard for its flexibility, power, and vast ecosystem. It is highly recommended for anyone serious about a career in data. The learning curve is manageable, especially for someone already skilled in logical thinking.

Q2: How much math do I really need to know?

For a practical, applied analyst, you need more conceptual intuition than advanced math. You should understand concepts like:

- What an “average” and a “standard deviation” are.

- The intuition behind finding a “line of best fit” (linear regression).

- The idea of optimizing for a goal (minimizing error).

You do not need to manually calculate derivatives or linear algebra equations—libraries handle that.
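As a small illustration of "line of best fit" and "minimizing error", the sketch below fits a straight line to five made-up data points with NumPy and compares its error to that of a line guessed by eye. The ad-spend scenario and the guessed line are assumptions for illustration.

```python
import numpy as np

# Made-up data: weekly ad spend vs. weekly sales, both in $1,000s
ad_spend = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
sales = np.array([12.0, 15.0, 21.0, 24.0, 28.0])


def mean_squared_error(slope, intercept):
    """Average squared gap between the line's predictions and actual sales."""
    predictions = slope * ad_spend + intercept
    return np.mean((sales - predictions) ** 2)


# np.polyfit with degree 1 returns the slope and intercept
# of the line that minimizes the squared error
best_slope, best_intercept = np.polyfit(ad_spend, sales, 1)

best_error = mean_squared_error(best_slope, best_intercept)
guess_error = mean_squared_error(3.0, 10.0)  # a line picked by eye

print(f"Best-fit line: sales = {best_slope:.2f} * ad_spend + {best_intercept:.2f}")
print(f"Error of best-fit line: {best_error:.3f}")
print(f"Error of guessed line:  {guess_error:.3f}")
```

The library does the calculus. What you need is the intuition that "training" here means searching for the slope and intercept with the smallest error, and that any other line will score worse.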

Q3: My company’s data is messy. Is ML even possible for us?

Absolutely. In fact, the vast majority of real-world corporate data is messy. The process of cleaning and preparing this data (Phase 3) is the first and most critical step. Starting an ML project can often be the catalyst a company needs to improve its overall data governance.

Q4: What’s the difference between AI and Machine Learning?

Think of it as a nested relationship.

- Artificial Intelligence (AI): The broadest term, referring to machines performing tasks that typically require human intelligence.

- Machine Learning (ML): A subset of AI. It’s one of the main methods by which we achieve AI: having machines learn from data rather than follow hand-written rules.

- Deep Learning: A subset of ML using complex “neural networks” with many layers, excellent for tasks like image and speech recognition.

Q5: How do I convince my US manager to invest in an ML project?

Frame it in terms of ROI and risk reduction, not technology.

- Do: “This model will help us prioritize our sales leads, allowing the team to focus on the 20% of accounts with an 80% chance of converting, potentially increasing sales by $X.”

- Don’t: “I want to use a Random Forest classifier.”

Q6: Are there ethical concerns with ML I should be aware of?

Yes, and this is critical. Models can perpetuate and even amplify existing biases in the data. For example, a hiring model trained on historical data from a company that historically favored one demographic over another could learn to do the same. It is your ethical and professional responsibility to test for and mitigate bias in your models.
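A first-pass bias check can be as simple as comparing how often the model selects members of each group. The sketch below uses ten hypothetical applicants. The group labels, the predictions, and the choice of selection rate as the fairness measure are all assumptions for illustration, and a real audit would go considerably further.

```python
import pandas as pd

# Hypothetical model decisions for ten applicants (made-up data)
decisions = pd.DataFrame({
    "group": ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"],
    "predicted_hire": [1, 1, 1, 0, 1, 1, 0, 0, 0, 1],
})

# Selection rate: the share of each group that the model recommends
selection_rates = decisions.groupby("group")["predicted_hire"].mean()

# Ratio of the lowest to the highest selection rate (1.0 means equal rates)
impact_ratio = selection_rates.min() / selection_rates.max()

print(selection_rates)
print(f"Impact ratio: {impact_ratio:.2f}")
# Group A is selected 80% of the time and group B 40%, so the ratio is 0.50
```

A ratio well below 1.0 does not prove the model is unfair, but it is a clear prompt to investigate the training data and features. US employment guidance commonly uses a four-fifths (0.8) rule of thumb as a screening threshold.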